I've been curious about building a WhatsApp bot for a while now—specifically, one that doesn't rely on the official WhatsApp Business API. The official route requires a lengthy approval process that's impractical for hobby projects. Plus, I enjoy a good challenge.

I had previously worked on a project using whatsapp-web.js, so I knew it was possible to interact with WhatsApp programmatically. This time, I wanted to build something more structured: a platform with a proper backend, frontend, and the ability to create multiple bot instances with different behaviors.

I decided to see how much of this I could build with AI assistance—specifically, using claude.ai for architecture and planning, and GitHub Copilot for implementation. This article documents that process: what worked, what didn't, and what I learned about working with AI as a development tool.

The Setup

I wanted to approach this project systematically, so I used two interfaces to the same underlying model, each in a different context:

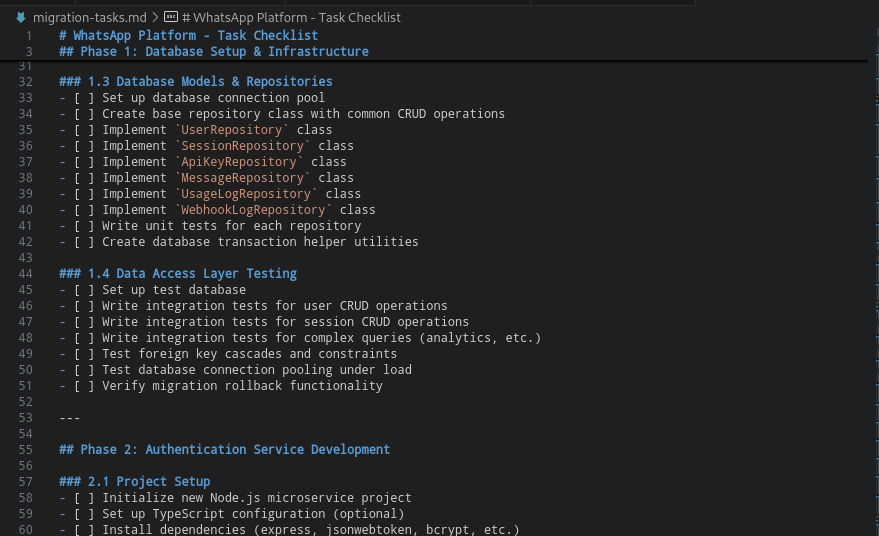

Claude (Sonnet 4) in the browser handled high-level planning and architecture. I used it to generate a Product Requirements Document (PRD) and a detailed task checklist broken down into phases.

GitHub Copilot (Pro trial) handled implementation. I set it up in both VS Code and my usual JetBrains IDEs, and quickly found that it integrated much more smoothly with VS Code. That led to a split workflow: VS Code for generating code, then WebStorm and IntelliJ for manually reviewing and refining what was generated.

A teammate at a hackathon had mentioned this approach, and I thought it was worth trying. The idea is simple: use one AI for strategic thinking, another for tactical execution. This way, you're not constantly context-switching between "what should I build" and "how do I build it."

The Process

With the PRD and task checklist in hand, I created a project directory with clear separation of concerns:

- Database migration files

- Frontend code

- Backend code

- WhatsApp service code

- The PRD and task checklist at the root for easy reference

The task checklist was organized into phases for each component. I decided to work through it sequentially, asking Copilot to implement one phase at a time. This became the core of my workflow: pick a phase, prompt Copilot to generate the code, review what it produced, make corrections, then move to the next phase.

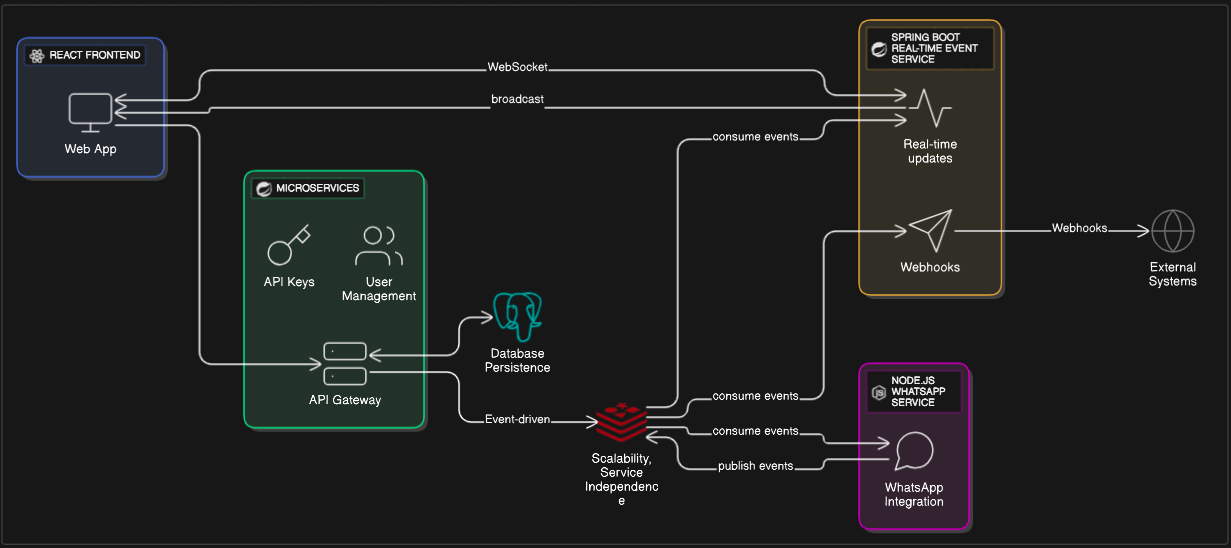

Experimenting with architectural changes

I initially built the project as a monolith, but as I thought about design patterns and scalability, I decided to refactor to an event-driven architecture. This became an interesting test of the AI's capabilities—asking it to refactor an existing architecture rather than just generate new code.

I started by asking Copilot to compare the original PRD with an updated version and identify what needed to change. It generated a detailed change document and an implementation checklist that broke the refactor into manageable phases. The refactor required more careful oversight than the initial implementation, particularly around cross-service data contracts.

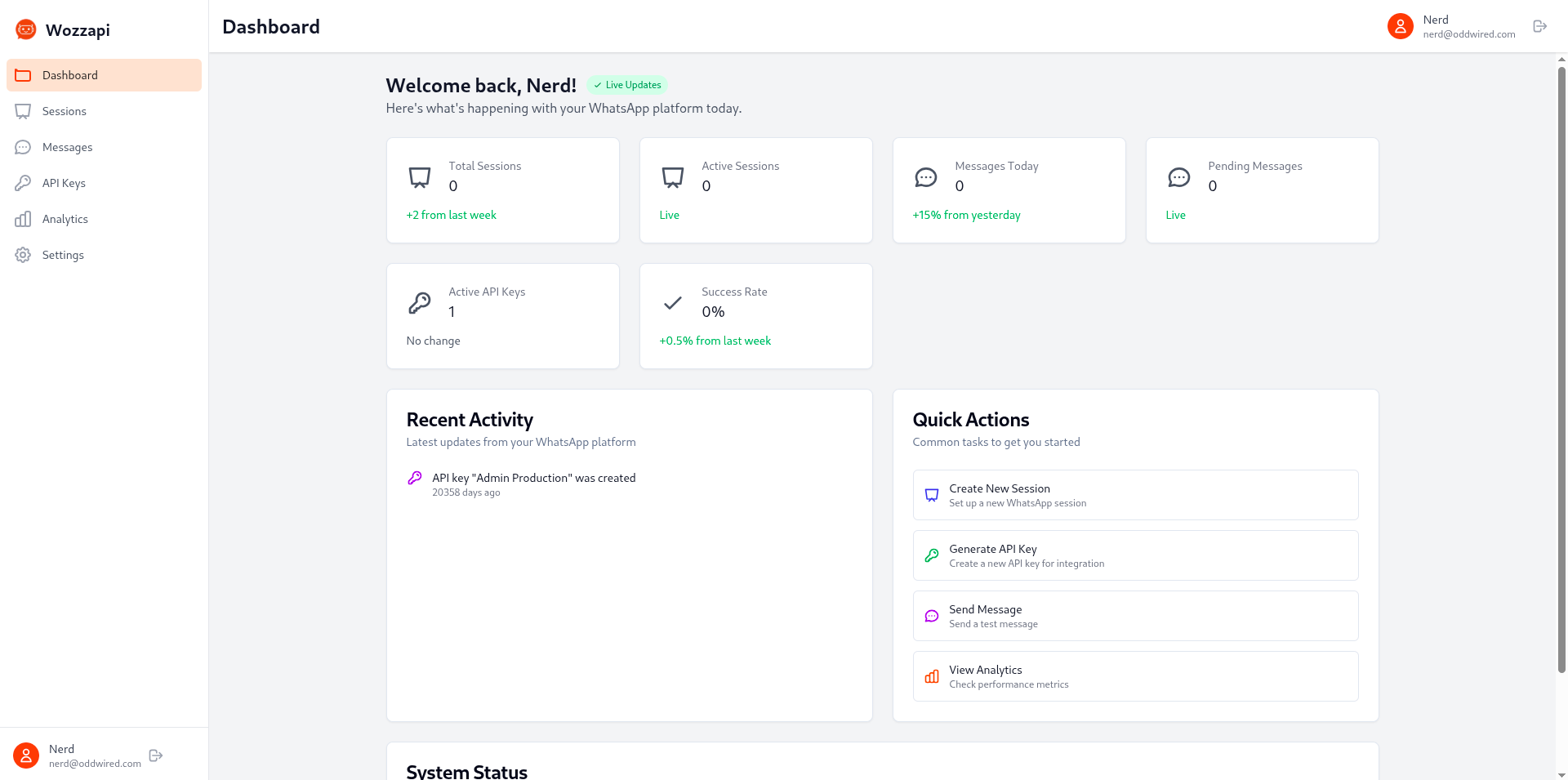

I treated the project as if it were a commercial product. I even asked Copilot to generate a complete landing website with documentation. The goal was to simulate how AI might be used in an organizational setting to build production-ready software.

What Worked Well

Speed of initial implementation

The initial setup—WhatsApp service, backend, frontend, and landing website—was completed in a weekend. For a hobby project worked on outside of a full-time job, this was remarkable. I estimate it would have taken about a month to achieve the same progress without AI assistance.

Accurate code generation with focused context

The AI performed best when given limited, specific context. For example, asking Copilot to "modify this page to behave in a certain way" produced accurate results with few bugs. The more focused the prompt and the smaller the scope, the better the output.

A particularly effective pattern was phase-by-phase implementation. When I asked: "From the implementation checklist document, implement Phase 2", Copilot knew exactly what to do because it had context from the checklist we'd created earlier. This structured approach worked significantly better than asking it to build large features all at once.

Debugging and targeted fixes

One of the biggest surprises was watching Copilot debug issues and edit specific sections of a source file. It wasn't just generating code from scratch—it could identify problems and apply surgical fixes.

For instance, when I encountered a Redis stream serialization error with ISO date strings, I could ask it to "fix the deserialization issue" and it would identify the root cause (JSON serialization mismatch between producer and consumer) and propose a specific solution with proper ObjectMapper configuration.
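To make that failure mode concrete, here is a minimal TypeScript sketch of the round trip. The `MessageReceivedEvent` shape and both function names are assumptions made for illustration; the project's real event classes are not shown in this article. The point is that `JSON.stringify` turns a `Date` into an ISO 8601 string, and the consumer only gets a date back if it converts that string explicitly.

```typescript
// Hypothetical event shape, for illustration only.
interface MessageReceivedEvent {
  botId: string;
  text: string;
  receivedAt: Date;
}

// Producer side: Date values become ISO 8601 strings in the JSON output.
function serializeEvent(event: MessageReceivedEvent): string {
  return JSON.stringify(event);
}

// Consumer side: the date field has to be converted back explicitly.
function deserializeEvent(json: string): MessageReceivedEvent {
  const raw = JSON.parse(json) as { botId: string; text: string; receivedAt: string };
  const receivedAt = new Date(raw.receivedAt);
  if (Number.isNaN(receivedAt.getTime())) {
    throw new Error(`receivedAt is not a valid ISO date: ${raw.receivedAt}`);
  }
  return { botId: raw.botId, text: raw.text, receivedAt };
}

const original: MessageReceivedEvent = {
  botId: "bot-1",
  text: "hello",
  receivedAt: new Date("2025-01-15T10:30:00.000Z"),
};
const wire = serializeEvent(original);
const restored = deserializeEvent(wire);
```

On the Java side the equivalent step is the deserializer configuration; the sketch only shows why both ends need to agree on how dates travel over the wire.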

Strategic planning and documentation

Claude excelled at creating structured planning documents. When I asked it to compare two PRD versions and create implementation plans, it generated comprehensive markdown documents with detailed checklists breaking down work into discrete phases. This gave both me and Copilot clear roadmaps to follow.

Realistic output quality

The landing page and documentation that Copilot generated looked professional and production-ready. The documentation was well-structured and the landing page had appropriate sections for features and use cases.

What Didn't Work

Context size limitations

I noticed a clear pattern: the AI struggled when given too much scope. Asking it to "build a frontend application" from scratch produced code with more bugs and inconsistencies than targeted requests like "modify this specific component to add feature X." The sweet spot was keeping tasks small and contexts limited.

API contract inconsistencies

The most time-consuming bugs were related to API contracts. The data fields expected by the backend often differed from what was implemented on the frontend, or the data returned didn't match exactly what the frontend expected. These mismatches required careful inspection of both sides of the application to identify and correct.

For example, the event structure between the Node.js WhatsApp service and the Java Spring Boot backend didn't align properly. The AI generated models with specific fields at the root level, but the actual events had a data property containing the specific information. This required significant manual restructuring of the event classes.
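The difference between the two shapes is easier to see in code. The sketch below is an assumption-laden illustration, not the project's actual contract: the `EventEnvelope` and `MessageData` types, the field names, and `parseMessageEvent` are all hypothetical. It shows an envelope with event-specific fields nested under `data`, plus a runtime check that rejects the flat shape the generated models assumed.

```typescript
// Hypothetical envelope: event-specific fields live under `data`, not at the root.
interface EventEnvelope<T> {
  type: string;
  timestamp: string; // ISO 8601
  data: T;
}

// Hypothetical payload for a received message.
interface MessageData {
  from: string;
  body: string;
}

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null;
}

// Validates the nested shape at runtime and throws on the flat one.
function parseMessageEvent(input: unknown): EventEnvelope<MessageData> {
  if (!isRecord(input) || typeof input.type !== "string" || typeof input.timestamp !== "string") {
    throw new Error("missing envelope fields");
  }
  const data = input.data;
  if (!isRecord(data) || typeof data.from !== "string" || typeof data.body !== "string") {
    throw new Error("event payload must be nested under `data`");
  }
  return { type: input.type, timestamp: input.timestamp, data: { from: data.from, body: data.body } };
}

const nested = parseMessageEvent({
  type: "message.received",
  timestamp: "2025-01-15T10:30:00.000Z",
  data: { from: "15550100", body: "hi" },
});

// The flat shape (fields at the root) fails validation.
let flatRejected = false;
try {
  parseMessageEvent({ type: "message.received", timestamp: "2025-01-15T10:30:00.000Z", from: "15550100", body: "hi" });
} catch {
  flatRejected = true;
}
```

A check like this at the service boundary would likely have surfaced the mismatch as an explicit error instead of as silently empty fields.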

Iterative debugging required

Some issues needed multiple attempts to resolve. A React infinite re-render loop caused by improper useEffect dependencies took several iterations to fix properly. The AI would fix one aspect but introduce new issues, requiring me to provide increasingly specific guidance about using primitive values instead of object references in dependency arrays.
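The reason primitives help is that React compares each dependency with its previous value using `Object.is`, so an object recreated on every render always looks changed. The sketch below simulates that comparison without React itself; `depsChanged` and `render` are simplified, hypothetical stand-ins rather than React internals.

```typescript
// Simplified stand-in for the dependency check: each entry is compared
// to its previous value with Object.is.
function depsChanged(prev: readonly unknown[], next: readonly unknown[]): boolean {
  return prev.length !== next.length || next.some((dep, i) => !Object.is(dep, prev[i]));
}

// Hypothetical render function: a fresh `filter` object is created on every call.
function render(botId: string) {
  const filter = { botId }; // new reference each render
  return { objectDeps: [filter], primitiveDeps: [filter.botId] };
}

const first = render("bot-1");
const second = render("bot-1");

// Same contents, different reference: the effect would run again.
const objectDepsChanged = depsChanged(first.objectDeps, second.objectDeps); // true
// Same string value: the effect would be skipped.
const primitiveDepsChanged = depsChanged(first.primitiveDeps, second.primitiveDeps); // false
```

If the effect also sets state, the object-dependency version re-renders, creates another new object, and runs the effect again, which is the loop described above.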

Similarly, getting WebSocket connections to work properly required multiple rounds of fixes—from auto-connection logic to proper state management to preventing memory leaks.
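The three concerns (guarded auto-connection, explicit state, and cleanup) can be sketched in one small class. This is an illustrative outline under assumptions, not the code from the project: `ConnectionManager`, `SocketLike`, and the state names are hypothetical, and `SocketLike` is modeled on a small subset of the browser WebSocket API so the sketch can run without a real connection.

```typescript
// Minimal subset of a WebSocket-style API that this sketch relies on.
interface SocketLike {
  addEventListener(type: "open" | "close", listener: () => void): void;
  removeEventListener(type: "open" | "close", listener: () => void): void;
  close(): void;
}

type ConnectionState = "idle" | "connecting" | "open" | "closed";

// Hypothetical helper: owns one socket, tracks its state, and detaches its
// listeners on dispose so nothing keeps a reference to a dead connection.
class ConnectionManager {
  state: ConnectionState = "idle";
  private socket: SocketLike | null = null;
  private readonly createSocket: () => SocketLike;
  private readonly onOpen = () => { this.state = "open"; };
  private readonly onClose = () => { this.state = "closed"; };

  constructor(createSocket: () => SocketLike) {
    this.createSocket = createSocket;
  }

  connect(): void {
    if (this.socket) return; // guard against duplicate connections
    this.state = "connecting";
    this.socket = this.createSocket();
    this.socket.addEventListener("open", this.onOpen);
    this.socket.addEventListener("close", this.onClose);
  }

  dispose(): void {
    if (!this.socket) return;
    this.socket.removeEventListener("open", this.onOpen);
    this.socket.removeEventListener("close", this.onClose);
    this.socket.close();
    this.socket = null;
    this.state = "closed";
  }
}
```

In a React component, `connect` would typically be called from an effect and `dispose` from that effect's cleanup function, so that unmounting the component also releases the socket.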

AI persistence on unnecessary changes

At one point, the AI kept insisting on editing a specific file to add code that wasn't needed. I had to explicitly instruct it not to touch that file. Once an AI decides something should be done a certain way, redirecting it can require very specific prompting.

This also appeared in code reviews where I had to add explicit instructions like "do not modify RedisConfig.java" to prevent unwanted changes during refactoring work.

The debugging reality

While the initial weekend got the foundation in place, I've spent about two hours after work on most days testing and resolving bugs. Most fixes were minor adjustments, but I found myself instructing the AI on how to fix or modify subsequent items based on patterns I'd discovered. The AI gets you far quickly, but it doesn't eliminate the need for manual debugging and refinement.

Type and serialization mismatches

The cross-language boundary exposed another limitation. The Redis event streaming between Node.js (producer) and Java Spring Boot (consumer) had serialization mismatches that the AI didn't anticipate. The Node.js service used string serialization while the Java consumer expected JSON, so I had to configure the serializers and deserializers manually.
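One common way to avoid this class of mismatch is to agree on a single encoding for stream entries. The sketch below shows that convention from the producer's point of view and is an assumption, not necessarily what this project ended up using: the `BotEvent` shape, the `payload` field name, and both helper functions are hypothetical. Redis stream entries are flat field/value pairs, so the whole event is stored as one JSON document under an agreed field name, and nested values and numbers survive the trip.

```typescript
// Hypothetical event used for illustration.
interface BotEvent {
  type: string;
  data: { chatId: string; unread: number };
}

// Agreed field name that both producer and consumer must use.
const PAYLOAD_FIELD = "payload";

// Producer: encode the event as a single JSON string field.
function toStreamFields(event: BotEvent): Record<string, string> {
  return { [PAYLOAD_FIELD]: JSON.stringify(event) };
}

// Consumer: read the agreed field and parse it as JSON.
function fromStreamFields(fields: Record<string, string>): BotEvent {
  const payload = fields[PAYLOAD_FIELD];
  if (payload === undefined) {
    throw new Error(`stream entry has no "${PAYLOAD_FIELD}" field`);
  }
  return JSON.parse(payload) as BotEvent;
}

const fields = toStreamFields({ type: "chat.updated", data: { chatId: "c-42", unread: 3 } });
const decoded = fromStreamFields(fields);
```

With this convention the consumer in any language only needs a string reader for the stream and a JSON parser for the `payload` field, which keeps the contract in one place.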

Results & Reflections

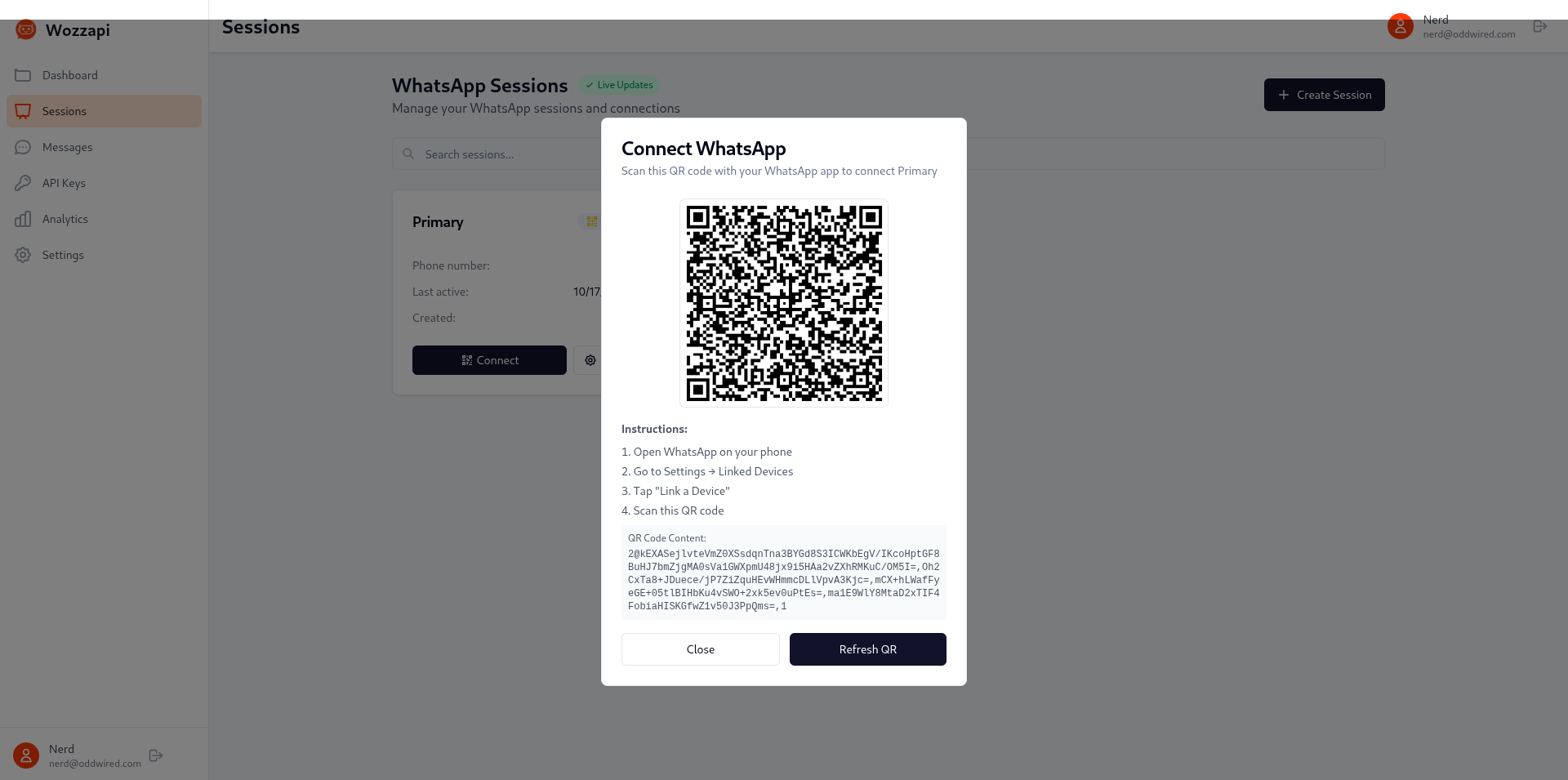

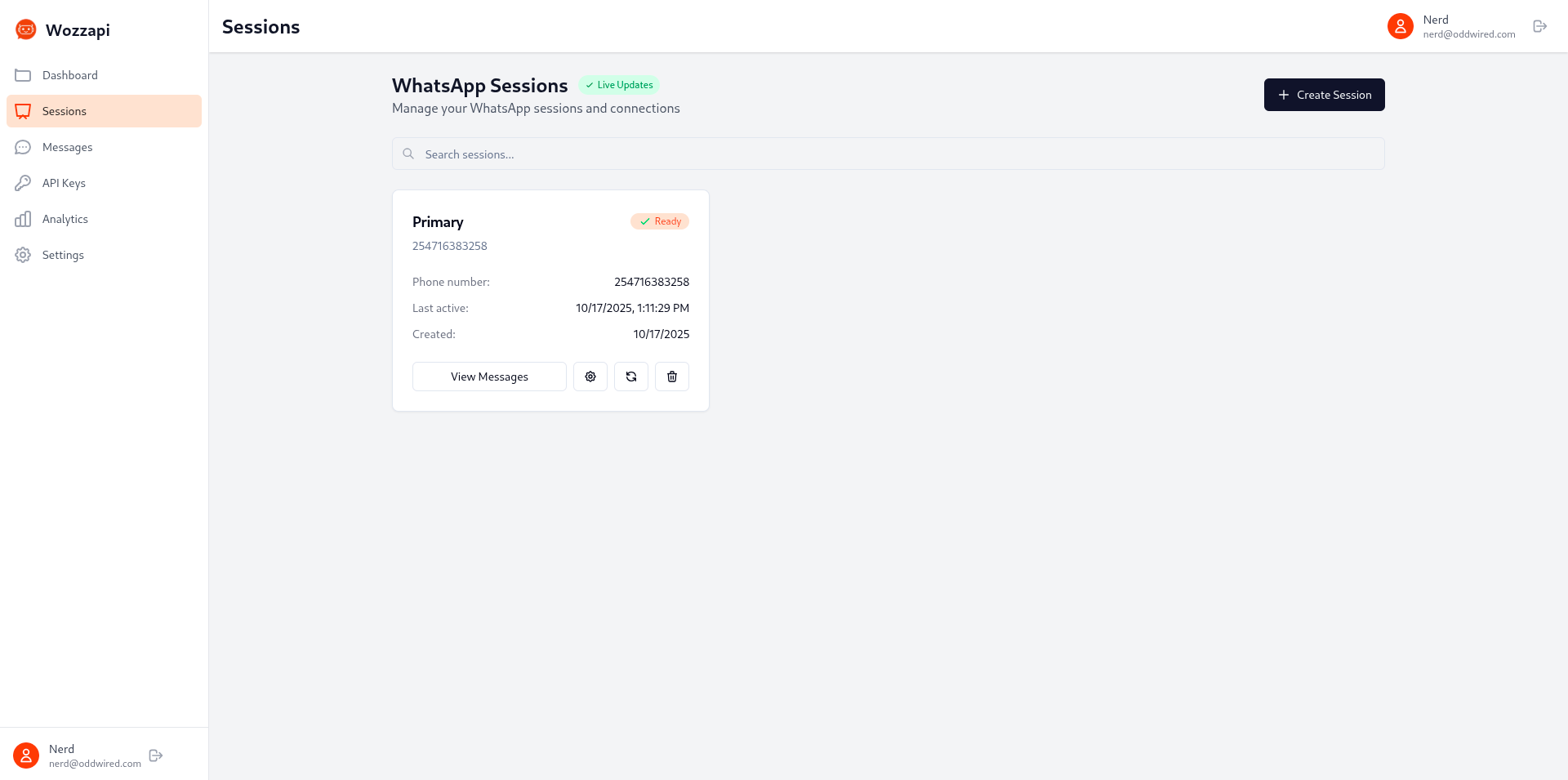

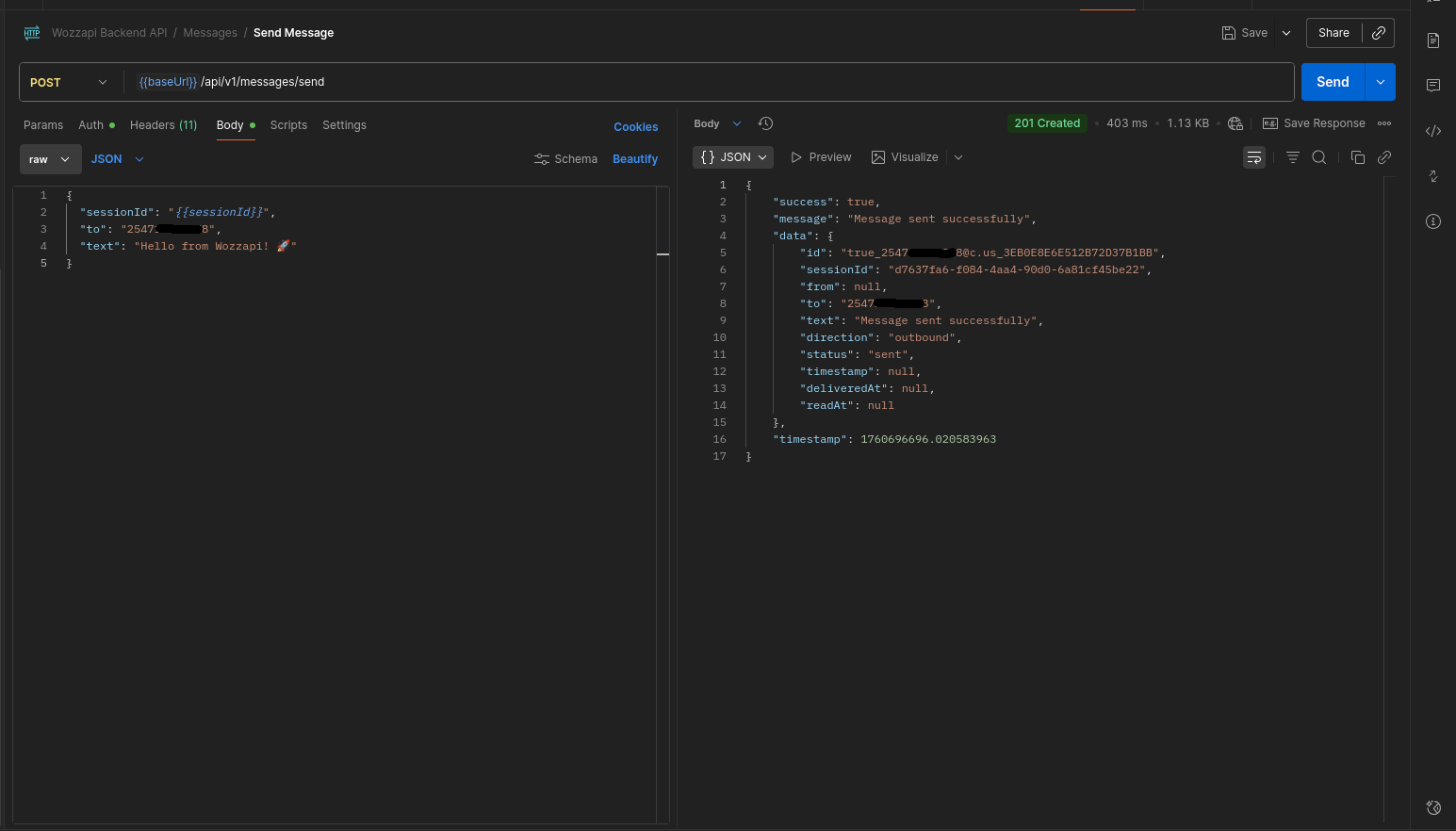

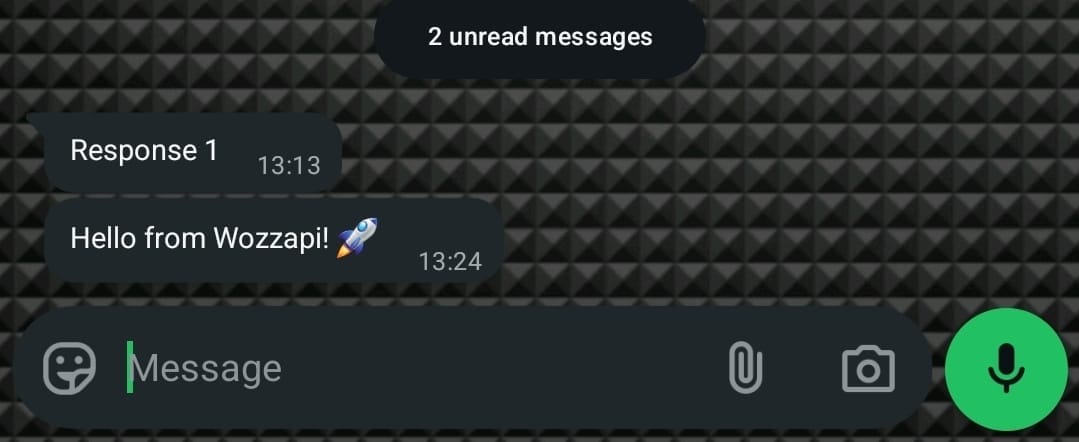

The project is now hosted on my homelab, with the service and landing website running. The core infrastructure is in place: a WhatsApp service that can handle multiple bot instances, a backend API with generic endpoints and callbacks, and a frontend for management.

Key takeaways from this experiment:

AI excels at scaffolding, not architecture: Claude was excellent for creating the PRD and task breakdown. Copilot was great at generating boilerplate and implementing well-defined features. But the architectural decisions and cross-cutting concerns (like API contracts) still required human oversight.

Scope control is critical: The smaller and more focused the task, the better the AI performed. Breaking work into phases was essential. Trying to generate large chunks of functionality at once led to more bugs and inconsistencies.

Review everything: The API contract issues taught me that you can't assume different parts of an AI-generated codebase will align perfectly, even when working from the same PRD. Each piece needs manual verification.

The workflow matters: Using Claude for planning and Copilot for implementation created a clear mental separation. Having the PRD and task checklist at the project root meant the AI and I had a shared reference point.

Time savings are real but not absolute: What might have taken a month took a weekend plus ongoing debugging sessions. The AI didn't reduce the work to zero, but it significantly compressed the initial development phase.

Next Steps

The infrastructure is in place, but the actual bots haven't been implemented yet. The next phase is to build specific bot integrations that leverage the generic APIs and callback system I've set up. This is where the project transitions from platform to practical automations.

The modular architecture means I can now experiment with different bot behaviors without touching the core WhatsApp service. Each bot can be a separate integration that communicates through the established API contracts.
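Since the bots are not built yet, the following is only a sketch of what "each bot as a separate integration" could look like. Everything in it is hypothetical: the `IncomingMessage` shape, the `BotHandler` signature, and the `BotRegistry` class are illustrative names, not part of the existing platform.

```typescript
// Hypothetical contract between the platform and a bot integration.
interface IncomingMessage {
  botId: string;
  from: string;
  body: string;
}

// A bot returns the reply text, or null to stay silent.
type BotHandler = (message: IncomingMessage) => string | null;

// Routes each message to the handler registered for its bot instance.
class BotRegistry {
  private readonly handlers = new Map<string, BotHandler>();

  register(botId: string, handler: BotHandler): void {
    if (this.handlers.has(botId)) {
      throw new Error(`bot already registered: ${botId}`);
    }
    this.handlers.set(botId, handler);
  }

  dispatch(message: IncomingMessage): string | null {
    const handler = this.handlers.get(message.botId);
    return handler ? handler(message) : null;
  }
}

const registry = new BotRegistry();
registry.register("echo", (m) => `You said: ${m.body}`);
registry.register("silent", () => null);

const reply = registry.dispatch({ botId: "echo", from: "15550100", body: "ping" });
// reply is "You said: ping"
```

The design choice being illustrated is that new behavior is added by registering a handler, so the core service never needs to know what a particular bot does.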

The experience reinforced that AI tools are powerful accelerators for side projects, but they work best when paired with clear planning, focused prompts, and thorough code review.